Q.1- List down all the Models of SDLC.

Ans- Here is a short explanation of each SDLC model:

1. Waterfall Model: Sequential approach where development flows steadily downwards through phases like requirements, design, implementation, testing, and maintenance.

2. V-Model (Verification and Validation Model): Pairs each stage of development with a corresponding testing phase, ensuring verification and validation at every step.

3. Agile Model: Iterative and flexible approach that emphasizes collaboration, customer feedback, and continuous improvement throughout development cycles.

4. Scrum: Agile framework characterized by short, iterative development cycles called sprints, with regular meetings (daily scrums) to assess progress and adapt as needed.

5. Spiral Model: Combines iterative development with elements of the waterfall model, focusing on risk analysis and addressing high-risk aspects early in the process.

6. Iterative Model: Repeatedly cycles through development phases, allowing for refinement and improvement with each iteration based on feedback.

7. Prototype Model: Involves building an initial, simplified version of the software to gather user feedback and refine requirements before proceeding with full development.

8. RAD (Rapid Application Development) Model: Emphasizes rapid prototyping and iterative development, aiming to deliver software quickly by using pre-built components and intensive user involvement.

9. Incremental Model: Divides the software into small, manageable increments, with each increment adding new functionality or improvements to the previous one.

10. DevOps Model: Integrates development and operations teams closely to automate and streamline the software delivery process, fostering collaboration and continuous delivery.

Q.2- What is STLC? Also, Explain all stages of STLC.

Ans-The Software Testing Life Cycle (STLC)

The Software Testing Life Cycle (STLC) is a systematic approach to testing a software application to ensure that it meets the requirements and is free of defects. It is a process that follows a series of steps or phases, and each phase has specific objectives and deliverables.

The STLC is used to ensure that the software is of high quality, reliable, and meets the needs of the end-users. The main goal of the STLC is to identify and document any defects or issues in the software application as early as possible in the development process. This allows for issues to be addressed and resolved before the software is released to the public.

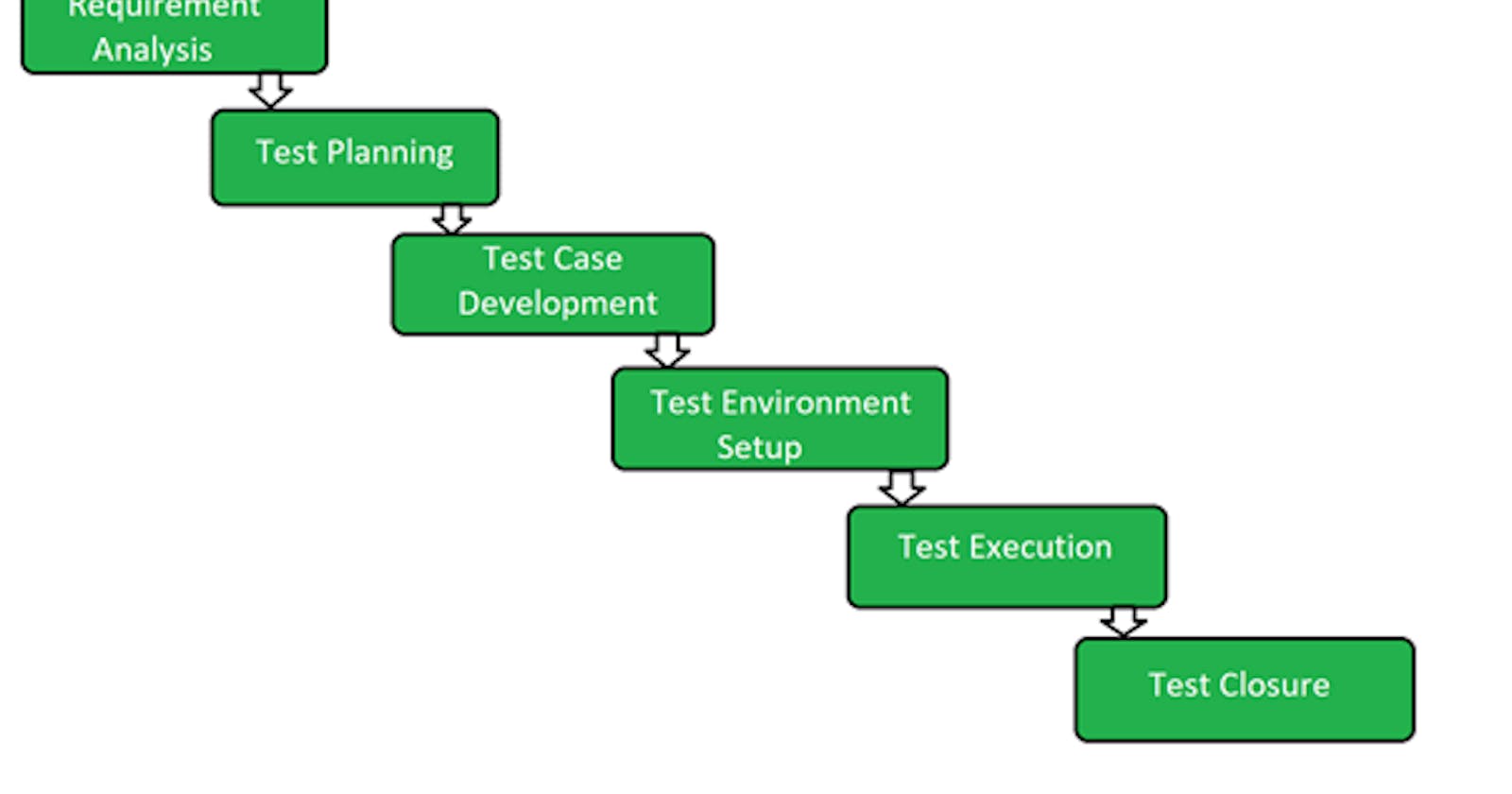

The stages of the STLC are Requirement Analysis, Test Planning, Test Case Development, Test Environment Setup, Test Execution, and Test Closure. Each of these stages includes specific activities and deliverables that help to ensure that the software is thoroughly tested and meets the requirements of the end users. Overall, the STLC is an important process that helps to ensure the quality of software applications and provides a systematic approach to testing.

It allows organizations to release high-quality software that meets the needs of their customers, ultimately leading to customer satisfaction and business success.

Phases of STLC

Requirements → Analysis → Design → Development → Testing → Implementation → Maintenance

1. Requirement Analysis:

Requirement Analysis is the first step of the Software Testing Life Cycle (STLC). In this phase, the quality assurance team studies the requirements to understand what is to be tested. If anything is missing or unclear, the team meets with the stakeholders to clarify the requirements in detail.

The activities that take place during the Requirement Analysis stage include:

• Reviewing the software requirements document (SRD) and other related documents

• Interviewing stakeholders to gather additional information

• Identifying any ambiguities or inconsistencies in the requirements

• Identifying any missing or incomplete requirements

• Identifying any potential risks or issues that may impact the testing process

• Creating a requirement traceability matrix (RTM) to map requirements to test cases

At the end of this stage, the testing team should have a clear understanding of the software requirements and should have identified any potential issues that may impact the testing process. This will help to ensure that the testing process is focused on the most important areas of the software and that the testing team is able to deliver high-quality results.
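The requirement traceability matrix mentioned above can be pictured as a simple mapping from requirements to test cases. The following is a minimal sketch, not a standard format: the requirement IDs, test case IDs, and helper function names are hypothetical and chosen only for illustration.

```python
# Hypothetical requirement IDs mapped to hypothetical test case IDs.
rtm = {
    "REQ-001": ["TC-001", "TC-002"],
    "REQ-002": ["TC-003"],
    "REQ-003": [],  # no test case has been written for this requirement yet
}


def uncovered_requirements(matrix):
    """Return the requirement IDs that have no test case mapped to them."""
    return [req for req, cases in matrix.items() if not cases]


def coverage_percent(matrix):
    """Return the percentage of requirements with at least one test case."""
    if not matrix:
        return 0.0
    covered = sum(1 for cases in matrix.values() if cases)
    return 100.0 * covered / len(matrix)


print(uncovered_requirements(rtm))
print(round(coverage_percent(rtm), 1))
```

A matrix like this makes missing coverage visible early: any requirement with an empty list still needs at least one test case.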

2. Test Planning:

Test Planning is the phase of the software testing life cycle in which all testing plans are defined. In this phase, the test manager estimates the effort and cost of the testing work. This phase starts once the requirement analysis phase is completed.

The activities that take place during the Test Planning stage include:

• Identifying the testing objectives and scope

• Developing a test strategy: selecting the testing methods and techniques that will be used

• Identifying the testing environment and resources needed

• Identifying the test cases that will be executed and the test data that will be used

• Estimating the time and cost required for testing

• Identifying the test deliverables and milestones

• Assigning roles and responsibilities to the testing team

• Reviewing and approving the test plan

At the end of this stage, the testing team should have a detailed plan for the testing activities that will be performed, and a clear understanding of the testing objectives, scope, and deliverables. This will help to ensure that the testing process is well-organized and that the testing team is able to deliver high-quality results.

3. Test Case Development:

The test case development phase starts once the test planning phase is completed. In this phase, the testing team writes the detailed test cases and prepares the test data required for testing. Once the test cases are ready, they are reviewed by the quality assurance team.

The activities that take place during the Test Case Development stage include:

• Identifying the test cases that will be developed

• Writing test cases that are clear, concise, and easy to understand

• Creating test data and test scenarios that will be used in the test cases

• Identifying the expected results for each test case

• Reviewing and validating the test cases

• Updating the requirement traceability matrix (RTM) to map requirements to test cases

At the end of this stage, the testing team should have a set of comprehensive and accurate test cases that provide adequate coverage of the software or application. This will help to ensure that the testing process is thorough and that any potential issues are identified and addressed before the software is released.
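As an illustration of what a written test case contains (test data, an expected result, and a link back to a requirement), here is a minimal sketch. The `CaseSpec` structure, the `is_valid_password` function under test, and all IDs are assumptions made up for this example; real projects usually keep test cases in a test management tool or a test framework.

```python
from dataclasses import dataclass


@dataclass
class CaseSpec:
    """One test case: what to check, with which data, and what to expect."""
    case_id: str
    requirement_id: str
    description: str
    test_data: dict
    expected_result: bool


def is_valid_password(password: str) -> bool:
    """Hypothetical function under test: 8+ characters and at least one digit."""
    return len(password) >= 8 and any(ch.isdigit() for ch in password)


test_cases = [
    CaseSpec("TC-001", "REQ-001", "Accepts a long password with a digit",
             {"password": "longpass1"}, True),
    CaseSpec("TC-002", "REQ-001", "Rejects a password that is too short",
             {"password": "abc1"}, False),
    CaseSpec("TC-003", "REQ-001", "Rejects a password without a digit",
             {"password": "longpassword"}, False),
]


def run_case(case: CaseSpec) -> bool:
    """Return True when the actual result matches the expected result."""
    actual = is_valid_password(case.test_data["password"])
    return actual == case.expected_result


for case in test_cases:
    print(case.case_id, "pass" if run_case(case) else "fail")
```

Keeping the expected result next to the test data is what lets a reviewer validate each case before execution begins.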

4. Test Environment Setup:

Test environment setup is a vital part of the STLC. The test environment determines the conditions under which the software is tested. This is an independent activity and can be started in parallel with test case development.

The testing team may not be involved in this process; in that case, either the developer or the customer creates the test environment.

5. Test Execution:

After test case development and test environment setup are complete, the test execution phase starts. In this phase, the testing team executes the test cases prepared in the earlier steps.

The activities that take place during the test execution stage of the Software Testing Life Cycle (STLC) include:

• Test data preparation: Test data is prepared and loaded into the system for test execution.

• Test environment setup: The necessary hardware, software, and network configurations are set up for test execution.

• Test execution: The test cases and scripts created in the test case development stage are run against the software application, and the results are collected.

• Test result analysis: The results of the test execution are analyzed to determine the software’s performance and identify any defects or issues.

• Defect logging: Any defects or issues that are found during test execution are logged in a defect tracking system, along with details such as the severity, priority, and description of the issue.

• Defect retesting: Any defects that are identified during test execution are retested to ensure that they have been fixed correctly.

• Test reporting: Test results are documented and reported to the relevant stakeholders.

It is important to note that test execution is an iterative process and may need to be repeated multiple times until all identified defects are fixed and the software is deemed fit for release.
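The defect logging and retesting steps above can be sketched as a small record with a status that changes over time. This is only an illustrative model: the `Defect` fields, the `Severity` and `Status` values, and the `retest` helper are assumptions for this example and do not reflect any particular defect tracking system.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3


class Status(Enum):
    OPEN = "open"
    FIXED = "fixed"
    CLOSED = "closed"


@dataclass
class Defect:
    """A logged defect with the details named in the defect logging step."""
    defect_id: str
    description: str
    severity: Severity
    priority: int  # assumption: 1 means "fix first"
    status: Status = Status.OPEN


def retest(defect: Defect, passed: bool) -> Defect:
    """Close a fixed defect if the retest passes; otherwise reopen it."""
    if defect.status is not Status.FIXED:
        raise ValueError("only defects marked as fixed can be retested")
    defect.status = Status.CLOSED if passed else Status.OPEN
    return defect


# Log a defect, mark it fixed after the developer's change, then retest it.
bug = Defect("DEF-001", "Login button does not respond", Severity.MAJOR, priority=1)
bug.status = Status.FIXED
retest(bug, passed=True)
print(bug.status)
```

A failed retest sends the defect back to the open state, which is why test execution tends to repeat until all defects are closed.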

6. Test Closure:

Test closure is the final stage of the Software Testing Life Cycle (STLC) where all testing-related activities are completed and documented.

The main objective of the test closure stage is to ensure that all testing-related activities have been completed and that the software is ready for release. At the end of the test closure stage, the testing team should have a clear understanding of the software’s quality and reliability, and any defects or issues that were identified during testing should have been resolved.

The test closure stage also includes documenting the testing process and any lessons learned so that they can be used to improve future testing processes.

The main activities that take place during the test closure stage include:

• Test summary report: A report is created that summarizes the overall testing process, including the number of test cases executed, the number of defects found, and the overall pass/fail rate.

• Defect tracking: All defects that were identified during testing are tracked and managed until they are resolved.

• Test environment clean-up: The test environment is cleaned up, and all test data and test artifacts are archived.

• Test closure report: A report is created that documents all the testing-related activities that took place, including the testing objectives, scope, schedule, and resources used.

• Knowledge transfer: Knowledge about the software and testing process is shared with the rest of the team and any stakeholders who may need to maintain or support the software in the future.

• Feedback and improvements: Feedback from the testing process is collected and used to improve future testing processes.
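The figures in a test summary report (test cases executed, defects found, overall pass/fail rate) are simple to compute from recorded results. The sketch below assumes results are stored as a mapping from hypothetical test case IDs to the strings "pass" or "fail"; the `summarize` function and its output keys are illustrative, not a standard report format.

```python
from collections import Counter

# Hypothetical execution results keyed by test case ID.
results = {
    "TC-001": "pass",
    "TC-002": "fail",
    "TC-003": "pass",
    "TC-004": "pass",
}


def summarize(results, defects_found):
    """Build the headline numbers for a test summary report."""
    counts = Counter(results.values())
    executed = len(results)
    pass_rate = 100.0 * counts["pass"] / executed if executed else 0.0
    return {
        "executed": executed,
        "passed": counts["pass"],
        "failed": counts["fail"],
        "defects_found": defects_found,
        "pass_rate_percent": round(pass_rate, 1),
    }


summary = summarize(results, defects_found=1)
for key, value in summary.items():
    print(f"{key}: {value}")
```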

Q.3- As a test lead for a web-based application, your manager has asked you to identify and explain the different risk factors that should be included in the test plan. Can you provide a list of the potential risks and their explanations that you would include in the test plan?

Ans- As a test lead for a web-based application, it is essential to identify potential risk factors that could impact the testing process and the overall success of the project. The following potential risk factors, with their explanations, could be included in the test plan:

1. Performance Risks:

- Explanation: Performance issues such as slow loading times, high server response times, or inadequate scalability can negatively impact user experience and result in loss of customers.

2. Security Risks:

- Explanation: Vulnerabilities such as insufficient data encryption, weak authentication mechanisms, or inadequate protection against common web attacks (e.g., SQL injection, cross-site scripting) can compromise sensitive user data and damage the reputation of the application.

3. Compatibility Risks:

- Explanation: Incompatibility with various web browsers (e.g., Chrome, Firefox, Safari), operating systems (e.g., Windows, macOS, Linux), or devices (e.g., desktops, tablets, mobile phones) may lead to inconsistent user experiences and reduced usability.

4. Integration Risks:

- Explanation: Failure to integrate with third-party systems, APIs, or external services (e.g., payment gateways, social media platforms) can disrupt critical functionalities and hinder the application's overall functionality.

5. Data Integrity Risks:

- Explanation: Risks related to data accuracy, consistency, and reliability, including data loss, corruption, or unauthorized access, which can lead to incorrect decision-making and legal implications.

6. Usability Risks:

- Explanation: Poor user interface design, unintuitive navigation, or lack of accessibility features can result in user frustration, decreased adoption rates, and increased support costs.

7. Regression Risks:

- Explanation: Changes or updates to the application codebase may inadvertently introduce defects or regressions in previously working functionalities, impacting overall system stability and reliability.

8. Resource Risks:

- Explanation: Insufficient availability of testing resources (e.g., hardware, software, skilled personnel) or delays in obtaining necessary resources can lead to project delays, increased costs, and compromised testing quality.

9. Scope Creep Risks:

- Explanation: Uncontrolled expansion of project scope, including addition of new features or changes in requirements, can lead to schedule overruns, budget overruns, and decreased overall project quality.

10. Communication Risks:

- Explanation: Ineffective communication among team members, stakeholders, or external partners can lead to misunderstandings, missed requirements, and delays in issue resolution, impacting project timelines and quality.
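One common way to decide which of these risks deserve the most testing attention is to score each one by likelihood and impact and sort by the product. This scoring scheme is not part of the answer above; it is an assumption added for illustration, and the 1 to 5 scores below are invented example values, not measurements.

```python
# Hypothetical risk register: likelihood and impact on an assumed 1-5 scale.
risks = [
    {"name": "Performance", "likelihood": 4, "impact": 3},
    {"name": "Security", "likelihood": 3, "impact": 5},
    {"name": "Compatibility", "likelihood": 2, "impact": 3},
]


def exposure(risk):
    """Risk exposure as likelihood multiplied by impact."""
    return risk["likelihood"] * risk["impact"]


def prioritize(register):
    """Return the risks sorted from highest to lowest exposure."""
    return sorted(register, key=exposure, reverse=True)


for risk in prioritize(risks):
    print(risk["name"], exposure(risk))
```

In a test plan, the resulting order can guide where test effort and mitigation steps are concentrated first.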

Q.4- Your TL (Team Lead) has asked you to explain the difference between quality assurance (QA) and quality control (QC) responsibilities. While QC activities aim to identify defects in actual products, your Team Lead is interested in processes that can prevent defects. How would you explain the distinction between QA and QC responsibilities to your boss?

Answer-

While quality assurance (QA) focuses on preventing defects by implementing processes and standards, quality control (QC) involves identifying and correcting defects in products or deliverables through inspections, reviews, and testing. QA is proactive and strategic, aiming to improve processes continuously, while QC is reactive and focuses on detecting and addressing defects that have already occurred. Both QA and QC are essential components of ensuring overall product quality and customer satisfaction.

1. Quality Assurance (QA):

QA focuses on preventing defects before they occur by implementing processes, standards, and methodologies.

QA activities involve establishing quality standards, defining processes, and ensuring that the entire development lifecycle adheres to these standards.

QA is proactive and strategic, aiming to improve processes continuously to prevent defects from arising in the first place.

Examples of QA activities include developing and implementing quality management plans, conducting process audits, and providing training to team members on best practices.

1. Example for Quality Assurance (QA):

- QA Example: Implementing a robust code review process where developers systematically review each other's code before it is merged into the main codebase. This process ensures that coding standards are followed, potential defects are identified early, and knowledge sharing among team members is facilitated. By establishing and enforcing this process, QA aims to prevent defects from entering the codebase in the first place, thereby improving overall code quality and reducing the likelihood of issues later in the development lifecycle.

2. Quality Control (QC):

QC involves activities aimed at identifying defects in products or deliverables through inspections, reviews, and testing.

QC activities occur during or after the development process and focus on detecting and correcting defects to ensure that the final product meets quality standards.

QC is reactive and focuses on identifying and addressing defects that have already occurred.

Examples of QC activities include conducting software testing (such as functional testing, regression testing, and performance testing), performing code reviews, and inspecting deliverables for compliance with specifications.

2. Example for Quality Control (QC):

- QC Example: Conducting thorough regression testing before a software release to ensure that new changes or features have not introduced any unintended side effects or regressions in existing functionalities. Regression testing involves systematically retesting previously tested features to verify that they still function correctly in the updated version of the software. By identifying and fixing any defects found during regression testing, QC helps ensure that the final product meets quality standards and delivers a consistent user experience, thereby reducing the risk of releasing defective software to customers.

Q.5- What is the difference between manual and automation testing?

Answer -

1. Execution Method:

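Manual Testing: Tests are performed manually by human testers without the use of automation tools. Testers interact directly with the application's user interface to validate its functionalities.

Automation Testing: Tests are conducted using automated testing tools and scripts rather than being executed by hand.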

Example: A tester manually clicks through a web application to verify that all links are working correctly.

2. Speed:

Manual Testing: Relatively slow due to the manual execution of test cases, as human testers need time to perform each step.

Automation Testing: Relatively fast due to the automated execution of test scripts, as tests can be run simultaneously on multiple machines.

Example: Automating regression tests for a web application allows running hundreds of test cases in a fraction of the time it would take manually.

3. Human Intervention:

Manual Testing: Requires human intervention for test execution, as testers need to manually execute each test case and observe the results.

Automation Testing: Requires minimal human intervention once test scripts are set up, as tests are executed automatically without manual intervention.

Example: In manual testing, a tester needs to verify each step of a login process by entering credentials manually. In automation testing, the login process can be automated using scripts to enter predefined credentials and verify successful login.

4. Initial Investment:

Manual Testing: Low initial investment as it primarily requires human resources for test execution.

Automation Testing: Higher initial investment due to the need for automation tools, infrastructure setup, and scripting efforts.

Example: Manual testing of a small-scale web application may only require hiring a few testers. In contrast, automation testing of the same application would require investing in testing tools, frameworks, and infrastructure setup.

5. Reusability:

Manual Testing: Limited reusability of test cases, as test scenarios need to be executed manually each time.

Automation Testing: High reusability of test scripts, as automated tests can be reused across different builds and environments.

Example: A manual test case for verifying the checkout process of an e-commerce website needs to be executed manually for each release. However, an automated test script for the same checkout process can be reused across multiple releases without manual intervention.

6. Maintenance:

Manual Testing: Manual tests may require frequent updates and maintenance as the application evolves or new features are added.

Automation Testing: Automated tests may require less maintenance once set up correctly, but scripts may need updates if there are significant changes in the application.

Example: If the UI of a web application undergoes changes, manual tests that rely on specific UI elements may need to be updated accordingly. Similarly, automated test scripts may need adjustments to accommodate UI changes.

7. Regression Testing:

Manual Testing: Time-consuming for repetitive regression testing, as testers need to manually execute regression test cases for each release.

Automation Testing: Efficient for repetitive regression testing, as automated tests can be rerun quickly for each release.

Example: Performing regression testing manually for a software update involves retesting all existing functionalities. In automation testing, regression test suites can be executed automatically after each code change to ensure that existing functionalities are not affected.

8. Complexity Handling:

Manual Testing: Better suited for testing complex scenarios that require human judgment and intuition, such as exploratory testing or usability testing.

Automation Testing: May struggle with testing scenarios that involve human-like judgment or decision-making, as automated tests follow predefined scripts.

Example: Testing the usability of a mobile application by manually simulating user interactions and observing user experience requires human judgment, making it suitable for manual testing. However, automating such tests to evaluate subjective aspects of user experience may be challenging.

9. User Experience Testing:

Manual Testing: Effective for subjective testing such as user experience evaluation, as human testers can provide qualitative feedback based on personal interaction with the application.

Automation Testing: Less effective for subjective testing such as user experience evaluation, as automated tests focus on objective validation of functionalities.

Example: A manual tester can provide feedback on the intuitiveness of navigation or the clarity of user interface elements based on personal interaction with the application. However, automated tests can only verify objective aspects such as the presence of UI elements or the correctness of user inputs.

The following table outlines the differences between manual and automation testing, with an example of each:

| Aspect | Manual Testing | Automation Testing |
| --- | --- | --- |
| Definition | Testing conducted manually by human testers. | Testing conducted using automated testing tools/scripts. |
| Execution Speed | Relatively slow due to manual execution. | Relatively fast due to automated execution. |
| Human Intervention | Requires human intervention for test execution. | Requires minimal human intervention once set up. |
| Initial Investment | Low initial investment as it primarily requires human resources. | Higher initial investment due to tools and infrastructure setup. |
| Reusability | Limited reusability of test cases. | High reusability of test scripts. |
| Maintenance | Manual tests may require frequent updates and maintenance as the application evolves. | Automated tests may require less maintenance once set up correctly. |
| Regression Testing | Time-consuming for repetitive regression testing. | Efficient for repetitive regression testing. |
| Complex Scenarios | Better suited for testing complex scenarios that require human judgment and intuition. | May struggle with testing scenarios that involve human-like judgment. |
| User Experience Testing | Effective for subjective testing such as user experience evaluation. | Less effective for subjective testing such as user experience evaluation. |
| Example | Manually clicking through a web application to test its functionalities. | Using Selenium WebDriver to automate the testing of web application functionalities by scripting test scenarios. |
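To make the automated side of the comparison concrete, the login check described earlier (entering predefined credentials and verifying the result) can be sketched with Python's built-in `unittest` module. This is a simplified sketch under stated assumptions: the `login` function and the `_USERS` store are hypothetical stand-ins for a real application, and a browser-level test with a tool such as Selenium WebDriver would drive the actual user interface instead.

```python
import unittest

# Hypothetical credential store standing in for the real application.
_USERS = {"alice": "s3cret-pass"}


def login(username: str, password: str) -> bool:
    """Stand-in for the application's login step; True means success."""
    return _USERS.get(username) == password


class LoginTests(unittest.TestCase):
    """Automated checks that replace typing credentials in by hand."""

    def test_valid_credentials_log_in(self):
        self.assertTrue(login("alice", "s3cret-pass"))

    def test_wrong_password_is_rejected(self):
        self.assertFalse(login("alice", "wrong"))

    def test_unknown_user_is_rejected(self):
        self.assertFalse(login("bob", "s3cret-pass"))


def run_login_suite():
    """Run the login tests and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_login_suite()
```

Once written, the same suite can be rerun unchanged for every build, which is where the speed and reusability advantages in the table come from.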